8:05 PM · 1/17/26 — Contributor Cynthia McCallum dives headfirst into the chaos of AI slop, where most of what’s generated isn’t helpful, harmless, or honest — it’s crafted to mislead. She breaks down the escalating dangers of AI‑driven misinformation and the confusion it leaves in its wake.

There’s a moment, right before a lie becomes a “fact,” when the world briefly hangs in the balance. A moment when people pause, squint, and ask themselves if what they’re seeing is real. That moment used to matter. It used to slow us down. It used to give truth a fighting chance. But in 2026, that moment is gone. We now live in an era where “AI slop” — the flood of low‑effort, auto‑generated images, videos, headlines, and pseudo‑expert commentary — has become so ubiquitous that the pause has evaporated. The lie doesn’t just travel halfway around the world before the truth ties its shoelaces; the lie is already on its third victory lap, selling merch, and booking a podcast tour. And the truth is still looking for its shoes. This is the chaos we’re living in, the chaos we’re voting in, and the chaos shaping our politics, our communities, and our sense of reality itself. Nobody wants to talk about the elephant in the room: AI slop is now influencing political perception, voter behavior, and public trust at a scale we’ve never seen before.

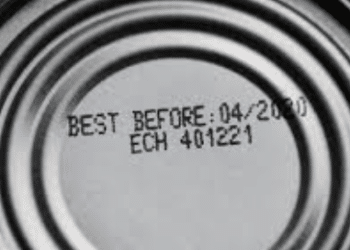

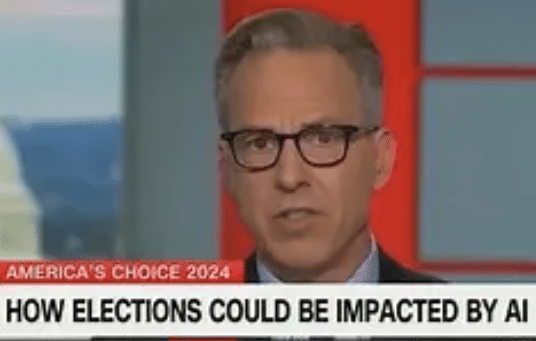

AI slop didn’t arrive with a bang. It seeped in quietly. At first, it was harmless — goofy filters, AI‑generated cats with too many toes, “What if the Founding Fathers were Marvel characters?” content. It was novelty. It was entertainment. It was background noise. Then the tools got better. Then the tools got faster. Then the tools got free. Suddenly the internet became a landfill of synthetic content: AI‑generated news anchors confidently reporting events that never happened, fabricated “breaking news” images during real emergencies, cloned political voices saying things they never said, AI‑written posts pretending to be eyewitness accounts, and auto‑generated “expert analysis” that sounds authoritative but is factually hollow. The volume is overwhelming, the speed is unprecedented, and the believability is dangerous. The average person — the voter, the citizen, the neighbor — is drowning in it.

Politics has always had spin, but spin used to require effort. You needed a campaign, a press release, a spokesperson, a strategy. Now you need a laptop and thirty seconds. AI slop has turned political persuasion into a frictionless, automated, infinitely scalable operation. And it’s not just campaigns doing it: it’s anonymous accounts, foreign actors, domestic trolls, bored teenagers, and people who simply want to watch the world burn. The result is a political environment where false claims spread faster than corrections, fake videos circulate before journalists can verify anything, AI‑generated “evidence” fuels outrage cycles, voters can’t distinguish real statements from synthetic ones, and communities fracture over events that never actually occurred. People aren’t just disagreeing about opinions anymore. They’re disagreeing about reality. And when reality becomes optional, democracy becomes unstable.

One recent example shows how quickly things can spiral. During a high‑profile law enforcement incident, a hyper‑realistic AI‑generated image began circulating within minutes. It showed a scene that looked like a violent escalation — flashing lights, officers in tactical gear, a suspect on the ground. It wasn’t real, but it didn’t matter. People shared it emotionally, not rationally. Local groups erupted. Comment sections exploded. Politicians reacted before verifying. News outlets scrambled to catch up. By the time the truth emerged, the damage was done. The lie had already shaped public perception. This is the new pattern: AI slop fills the vacuum before facts arrive.

Another example involved a synthetic audio clip of a well‑known public official. The voice sounded real. The cadence was perfect. The content was inflammatory. It spread like wildfire because it felt authentic and matched what people were already primed to believe. Only later did analysts confirm it was AI‑generated. But most people never saw the correction. They only saw the lie. And in politics, perception becomes reality.

We’ve also seen AI‑generated “local witnesses” appear during natural disasters. Dozens of posts surfaced online — detailed, emotional, and completely fabricated. They described events that didn’t happen, injuries that weren’t real, and government responses that never occurred. People trusted them because they sounded human. They used regional slang, referenced local landmarks, and mimicked the tone of real community members. But they were synthetic, and they shaped public outrage, charity donations, and political blame. AI slop doesn’t just distort national narratives; it distorts local ones, the ones that hit closest to home.

In another case, a video circulated showing a beloved local figure endorsing a political candidate. The lighting was perfect. The voice was perfect. The gestures were perfect. It was fake. But it influenced voters before it was debunked, and even after the truth came out, some people insisted it was real. Once a lie confirms what someone wants to believe, it becomes sticky. It becomes comfortable. It becomes part of their worldview. AI slop exploits that vulnerability with surgical precision.

People don’t fall for AI slop because they’re stupid. They fall for it because they’re overwhelmed. The human brain wasn’t built for infinite content, infinite speed, infinite manipulation, or infinite synthetic reality. We evolved to trust what we see and hear. We evolved to rely on social cues. We evolved to believe our eyes. AI slop weaponizes those instincts. It creates images that look like evidence, voices that sound like authority, videos that feel like truth, and posts that mimic real people. And because the volume is so high, people stop questioning. They stop verifying. They stop pausing. They just react. And reaction is the fuel of misinformation.

Here’s the part nobody wants to say out loud: AI slop doesn’t need to convince everyone. It just needs to confuse enough people. Confusion is a political weapon. Doubt is a political weapon. Exhaustion is a political weapon. If voters don’t know what’s real, they disengage. If voters don’t trust information, they withdraw. If voters don’t believe anything, they believe nothing — or they believe whatever aligns with their emotions. That’s when democracy becomes vulnerable. Not because people are misinformed, but because they’re disoriented. AI slop is not just misinformation; it’s disinformation at scale. It’s confusion as a service. It’s chaos on demand. And it’s working.

When every image can be faked, every video can be fabricated, every voice can be cloned, and every “fact” can be generated on command, society loses its shared foundation. We stop agreeing on what happened, who said what, what is real, what is trustworthy, and what counts as evidence. And once a society stops agreeing on reality, it stops functioning. AI slop isn’t just a tech problem. It’s a cultural problem, a civic problem, a psychological problem, and a democracy problem. And it’s accelerating.

We’re entering an election year where synthetic videos will be indistinguishable from real ones, AI‑generated political ads will target people with surgical precision, fake scandals will erupt weekly, real scandals will be dismissed as fake, and voters will be manipulated by content that didn’t exist five minutes earlier. The average person who just wants to vote responsibly, stay informed, and make good decisions will be navigating a minefield of manufactured reality. This is the elephant in the room. This is the crisis nobody wants to name. This is the danger we’re already living in. AI slop isn’t coming. It’s here. It’s everywhere. And it’s reshaping the world faster than we can respond.

So what do we do? We slow down. We question. We verify. We resist the urge to react instantly. We treat every sensational image, video, or quote as suspect until proven otherwise. And we acknowledge, openly and honestly, that we are living in a new era where truth must fight harder than ever. Because if we don’t, the slop wins. The lie wins. The chaos wins. And democracy loses.

We want to hear about your AI experiences.