The Federal Emergency Management Agency (FEMA) has reactivated its online rumor response website, last used after Hurricanes Helene and Milton in the fall, to counter false claims about the agency in connection with the deadly fires in California.

The agency responded to rumors that commonly surface during major disasters. It clarified whether FEMA’s assistance is limited to a single payment (it is not) and whether applying for assistance gives FEMA or the federal government ownership of, or authority over, a person’s property (it does not).

The Los Angeles Fire Department is also refuting falsehoods while responding to the fires. Erik Scott, an LAFD public information officer, posted on X to debunk claims that the department was asking the public for help fighting the wildfires.

Jason Davis, a research professor at Syracuse University who specializes in disinformation detection, says that the rapid and direct response to false allegations reflects a change in how officials communicate with the public when disasters strike.

Davis said that the rise and rapid spread of AI-generated content has led officials to confront falsehoods more directly.

“In the past the idea was that you should be above misinformation and disinformation. You shouldn’t say anything, because it would give credibility to it,” Davis said. “That conversation is different because it’s more prevalent and of a higher quality.”

Davis said that this has become a common practice. FEMA, the American Red Cross and local governments devoted resources to combating misinformation during Hurricanes Helene and Milton.

Davis said that it is important to address rumors directly, especially when people make critical decisions about their own safety.

He said, “Officials cannot ignore it as there are real consequences.” The stakes, he added, are just too high.

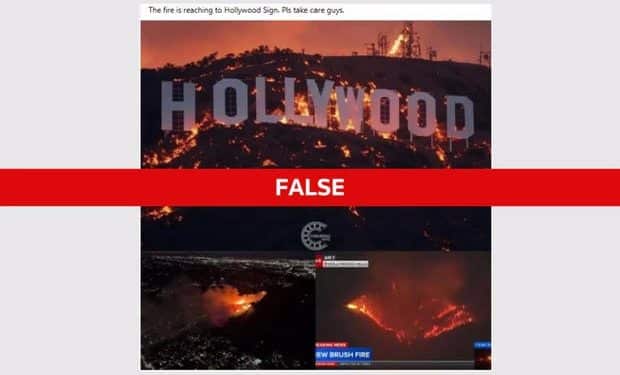

Fake images and recycled conspiracy theories

During the fires, misleading and fabricated images circulated widely. These included AI-generated videos that appeared to show the Hollywood Sign engulfed in flames. Some were crude, while others looked realistic.

Davis said that convincing images of disaster areas are easy to create using AI because viewers expect to see chaos.

He said that people often look to confirm what they already believe.

The most common form of misinformation is footage taken out of context. This includes old videos presented as new and misinterpretations of what a video actually shows.

A viral video purporting to show firefighters extinguishing flames with women’s handbags was widely shared during the California fires. According to an LAFD spokesperson, the firefighters in the video were actually using canvas bags, a common tool for fighting small fires.

With the cause of the California wildfires yet to be determined, online speculation about how they started is rife.

Misleading videos have fueled conspiracy theories, such as the claim that directed energy weapons (DEWs) ignited the fires. Similar claims were made and debunked after the 2019 Australia fires and the 2023 Maui fires.

Meanwhile, California Gov. Gavin Newsom has launched a page on his site called “California Fire Facts,” where he responds directly to online allegations.

A viral post on X promoted the false claim that Newsom “worked with developers” to rezone burned land in Pacific Palisades so that apartments could be built. Newsom replied: “This isn’t true.”

What can the public do?

If you suspect something was created by AI, first look for visual clues. AI-generated images are often distorted in the background, because the focus is usually on the subject in the foreground. Details like trees and lampposts can be inconsistent or warped.

Second, pause and think before sharing videos or information. Verify what you are seeing and consult reliable sources. Davis said that sharing unverified content with friends can give it unwarranted credibility.